TL;DR: Try OpenAI o3-mini for free, with no sign-up required, at app.giz.ai/assistant?mode=chat&baseModel=o3-mini. You get six free requests per hour across three reasoning modes.

Experience OpenAI o3-mini Now: Free Access, No Sign-Up Required

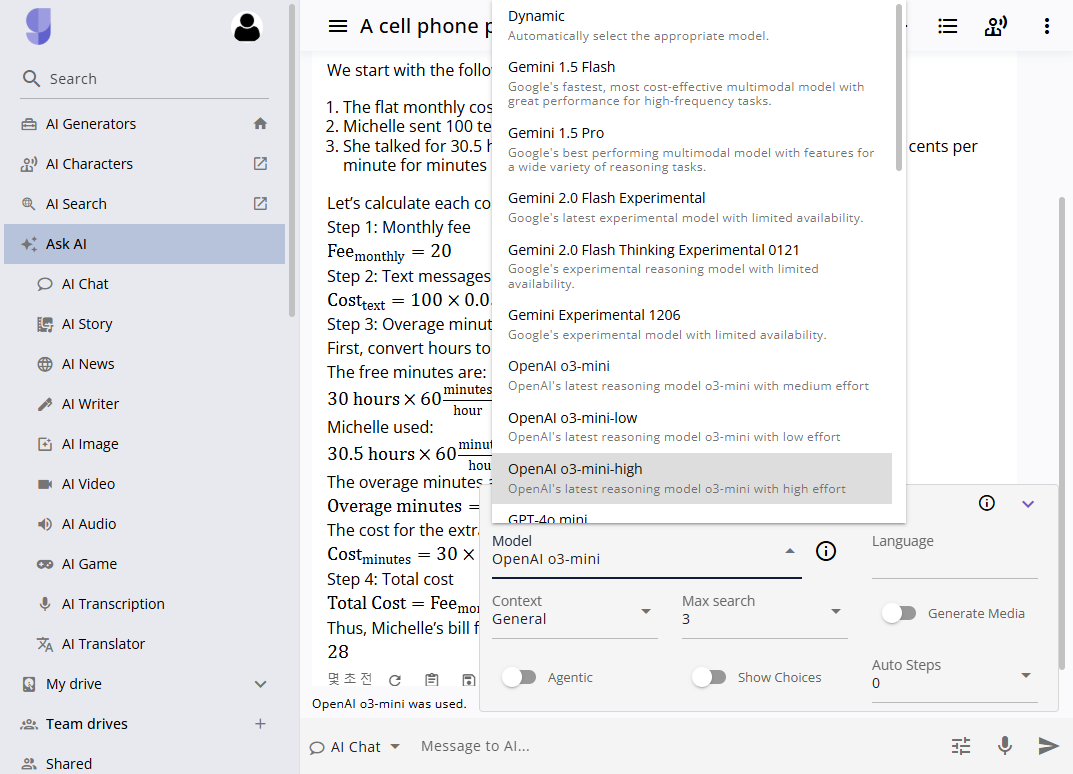

OpenAI’s latest reasoning model, o3-mini, is changing how we approach complex tasks in STEM, coding, and general problem solving. It is now available for free without any sign-up, with generous usage limits that let you test three reasoning modes: o3-mini, o3-mini-medium, and o3-mini-high. In this article, we cover o3-mini’s key features and how its free access works, explain why professionals, developers, and enthusiasts are turning to it for better performance and cost efficiency, and show how you can start using it right now.

What Is OpenAI o3-mini?

OpenAI o3-mini is the newest addition to OpenAI’s series of reasoning models. It builds on earlier models such as o1 and o1-mini, delivering improved accuracy, faster responses, and strong reasoning in a compact, cost-effective package. Designed especially for STEM applications, o3-mini excels at mathematical problems, coding challenges, and scientific queries. Its low latency and strong step-by-step reasoning make it a valuable tool for everyday users and professionals alike.

Key Features of o3-mini

- Enhanced Reasoning Performance: o3-mini uses chain-of-thought reasoning to work through complex problems step by step, which yields more accurate and logically structured responses.
- Faster Response Times: In OpenAI’s A/B testing, o3-mini responded about 24% faster than o1-mini, so you get answers quickly without sacrificing quality.
- Optimized for STEM Applications: Whether it’s working through complex math problems or troubleshooting code, o3-mini is built for precision and clarity in scientific, technical, and engineering contexts.
- Support for Multiple Reasoning Levels: You can choose among three reasoning efforts (standard o3-mini, o3-mini-medium, and o3-mini-high), each with a different balance of speed, accuracy, and cost.
- Free Access with No Sign-Up: Perhaps the most exciting feature is that you can try o3-mini for free without registering or signing in, which is ideal if you want to test the model without commitment.

For more detailed comparisons, see OpenAI’s original o3-mini release announcement.

Unlocking Free Access Without Sign-Up

One of the standout features of this offering is its free, no-sign-up usage policy. OpenAI already makes o3-mini available to free-tier ChatGPT users, and the access page linked in this article goes a step further by requiring no account at all. You get up to six requests per hour across the three reasoning modes:

- OpenAI o3-mini: 3 requests per hour
- OpenAI o3-mini-medium: 2 requests per hour
- OpenAI o3-mini-high: 1 request per hour

This free allocation of six requests per hour lets anyone explore the model without the overhead of registering an account. It makes advanced reasoning far more accessible and fits the broader push toward democratizing AI.

How to Get Started

To start experiencing the power of OpenAI o3-mini, simply visit app.giz.ai/assistant?mode=chat&baseModel=o3-mini. The process is straightforward:

- Direct Access: No sign-up or login is required; the free access URL takes you straight in.
- Multiple Reasoning Modes: Use the interface to switch between standard, medium, and high reasoning effort depending on the complexity of your task.
- Free Usage Limits: Use your free hourly requests with no hidden fees or subscription requirements.

This no sign-up model is ideal for both developers looking to test new algorithms and casual users wanting to experience advanced problem solving.

The Technology Behind o3-mini

OpenAI’s o3-mini builds on research in chain-of-thought reasoning. The core idea is that the model works through a problem in explicit intermediate steps, “thinking” before it delivers its final answer. This distinguishes it from non-reasoning models that answer directly without a separate reasoning phase.

Chain-of-Thought and Deliberative Alignment

The chain-of-thought mechanism has the model generate intermediate reasoning before its final response, breaking a complex query into manageable steps and improving accuracy. OpenAI also trained o3-mini with a technique called deliberative alignment, which teaches the model to reason explicitly about OpenAI’s safety specifications before answering. This reduces the risk of unsafe or non-compliant outputs while keeping responses coherent and reliable.

Such advancements are critical now that reasoning models must balance high performance with safe use.

Applications in STEM and Coding

Thanks to its enhanced reasoning, o3-mini is especially strong in STEM domains. On competition math benchmarks such as AIME, OpenAI reports that o3-mini outperforms o1-mini, most clearly at high reasoning effort. Developers report that it works well for code debugging and algorithm design, and its detailed, logically structured outputs make it a valuable tool in classrooms and tech-driven workplaces.

Case Study: Solving Advanced Math Problems

Consider a user who needs to solve a multi-step calculus problem. A traditional model may give an answer that looks correct at first glance, but the reasoning behind it can be opaque. o3-mini, by contrast, can present a step-by-step solution that lets the user follow every intermediate calculation. This transparency boosts the user’s confidence and makes the output a useful teaching aid for students and professionals alike.
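As an illustration of what following every intermediate calculation looks like, here is a short hand-worked integration-by-parts example written in that step-by-step style. It is not actual o3-mini output, only a sketch of the format:

```latex
\begin{aligned}
\int x e^{x}\,dx &= x e^{x} - \int e^{x}\,dx
  && \text{parts: } u = x,\; dv = e^{x}\,dx,\; du = dx,\; v = e^{x} \\
&= x e^{x} - e^{x} + C \\
&= e^{x}(x - 1) + C \\
\frac{d}{dx}\bigl[e^{x}(x - 1)\bigr] &= e^{x}(x - 1) + e^{x} = x e^{x}
  && \text{check by differentiating}
\end{aligned}
```

The final line verifies the result by differentiating it back to the original integrand, which is exactly the kind of step a reader can check independently.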

Case Study: Debugging Complex Code

Similarly, when debugging code, it is crucial to understand the underlying logic of the program. o3-mini can review code snippets, identify logical errors, and offer step-by-step corrections. Developers have noted that the model’s ability to maintain contextual awareness over long sequences of code leads to fewer critical errors and reduced development time.

Performance Benchmarks and Technical Comparisons

OpenAI and independent testers have benchmarked o3-mini against earlier models such as o1-mini and against competing reasoning models. The results show that o3-mini is substantially faster and generally more accurate.

Comparative Analysis

- Speed: In OpenAI’s A/B testing, o3-mini’s average response time was about 24% shorter than o1-mini’s. This improvement matters for real-time applications where speed drives productivity.
- Accuracy: OpenAI reports that expert testers observed a 39% reduction in major errors on difficult real-world questions compared with o1-mini. This makes o3-mini particularly effective in domains that require high precision.
- Cost Efficiency: Advanced reasoning models have traditionally been expensive to run, but o3-mini is designed to be cost-effective. It still costs more per token than non-reasoning models like GPT-4o mini, and higher reasoning effort generates more reasoning tokens (billed as output tokens), so costs rise with effort. Even so, its balance of cost and performance suits everyday use.
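The 24% figure describes reduced response time. As a quick check, this sketch computes it from the average response times OpenAI reported in its launch announcement (7.7 seconds for o3-mini versus 10.16 seconds for o1-mini; treat these as figures to verify against the original post). The function name `percent_faster` is only illustrative.

```python
def percent_faster(new_seconds: float, old_seconds: float) -> float:
    """Share of response time saved, as a percentage: (old - new) / old * 100."""
    if old_seconds <= 0:
        raise ValueError("old_seconds must be positive")
    return (old_seconds - new_seconds) / old_seconds * 100


# Average response times reported by OpenAI for its A/B test (verify against the source).
O3_MINI_AVG_S = 7.7
O1_MINI_AVG_S = 10.16

if __name__ == "__main__":
    # prints "24.2% faster"
    print(f"{percent_faster(O3_MINI_AVG_S, O1_MINI_AVG_S):.1f}% faster")
```

Note the design choice: “faster” here means the share of time saved. Expressed as a throughput ratio, 10.16 / 7.7 is about 1.32, so the same data can also be described as roughly 32% more responses per unit of time.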

These benchmarks show clear value for business applications as well as for individual learners and hobbyists. For detailed performance metrics and comparisons, see OpenAI’s release announcement and independent benchmark reports.

Token Processing and Output Limits

OpenAI o3-mini also comes with specific token limits. The model has a context window of up to 200,000 tokens and can generate up to 100,000 output tokens per request; its reasoning tokens count toward that output limit and also take up space in the context window. These large limits matter when you work with long documents, large programming tasks, or detailed reasoning problems, because vital information does not need to be truncated.
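To make these limits concrete, here is a minimal Python sketch that checks whether a prompt leaves enough room for a reply. It assumes OpenAI’s rough rule of thumb of about four characters per token for English text (use a real tokenizer for exact counts), and the 25,000-token default reservation for reasoning plus visible output is an assumed starting point, not an official requirement. Because reasoning tokens count toward the output limit and occupy the context window, the check reserves output space up front.

```python
CONTEXT_WINDOW = 200_000  # o3-mini context window: input plus output, reasoning included
MAX_OUTPUT = 100_000      # o3-mini cap on generated tokens, reasoning tokens included


def rough_token_count(text: str) -> int:
    """Very rough estimate using ~4 characters per token (English text), rounded up."""
    return (len(text) + 3) // 4


def fits_in_context(prompt: str, reserved_output: int = 25_000) -> bool:
    """True if the prompt plus the reserved output budget fits in the context window."""
    if not 0 < reserved_output <= MAX_OUTPUT:
        raise ValueError("reserved_output must be between 1 and MAX_OUTPUT")
    return rough_token_count(prompt) + reserved_output <= CONTEXT_WINDOW


if __name__ == "__main__":
    document = "word " * 150_000  # about 750,000 characters of placeholder text
    print(rough_token_count(document), fits_in_context(document))
```

If a long document does not fit, splitting it into sections or lowering the reserved output budget are the two obvious levers; lowering the budget risks cutting off the reasoning before a final answer is produced.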

How to Use o3-mini Effectively

Using OpenAI o3-mini to its fullest potential requires an understanding of its different reasoning modes and operational limits. Since the model offers three distinct modes—standard o3-mini, o3-mini-medium, and o3-mini-high—users should select the appropriate mode based on their specific needs.

Understanding the Three Reasoning Modes

- Standard o3-mini: Optimized for general tasks. It delivers fast responses with enough reasoning depth for everyday queries. Free access includes 3 requests per hour in this mode.
- o3-mini-medium: A balanced option with a moderate level of reasoning effort, suitable for tasks that need more detailed explanations without a large sacrifice in speed. You get 2 free requests per hour in this mode.
- o3-mini-high: Designed for tasks where accuracy and in-depth reasoning are paramount, such as complex coding problems or advanced mathematical challenges. Because of its higher computational cost, this mode is limited to 1 free request per hour.
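Choosing a mode can be expressed as a small rule. The Python sketch below is hypothetical: the complexity labels and the `choose_mode` helper are illustrative, and only the 3/2/1 hourly limits come from this article. It prefers the mode that matches the task and, if that mode’s quota is used up, falls back to a lower-effort mode rather than waiting.

```python
from typing import Mapping, Optional

# Free hourly limits per mode, as listed in this article.
FREE_LIMITS = {"o3-mini": 3, "o3-mini-medium": 2, "o3-mini-high": 1}

# Modes ordered from least to most reasoning effort.
MODES_BY_EFFORT = ["o3-mini", "o3-mini-medium", "o3-mini-high"]

# Hypothetical mapping from a rough task-complexity label to the preferred mode.
PREFERRED = {"simple": "o3-mini", "detailed": "o3-mini-medium", "hard": "o3-mini-high"}


def choose_mode(complexity: str, remaining: Mapping[str, int]) -> Optional[str]:
    """Return the preferred mode, or the next lower-effort mode that still has quota."""
    preferred = PREFERRED[complexity]  # raises KeyError for unknown labels
    start = MODES_BY_EFFORT.index(preferred)
    # Try the preferred mode first, then step down towards standard o3-mini.
    for mode in reversed(MODES_BY_EFFORT[: start + 1]):
        if remaining.get(mode, 0) > 0:
            return mode
    return None  # no suitable quota left this hour


if __name__ == "__main__":
    print(choose_mode("hard", FREE_LIMITS))
    print(choose_mode("hard", {"o3-mini": 3, "o3-mini-medium": 2, "o3-mini-high": 0}))
```

The rule deliberately never escalates a simple task to a higher mode, so the scarcer medium and high requests stay available for queries that need them.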

Practical Usage Examples

- Academic Research: Researchers can use o3-mini-high to break down intricate theoretical questions. The model’s detailed chain-of-thought responses allow academics to understand every step in the reasoning process, making it useful for writing research papers or preparing lectures.
- Coding and Software Development: Developers can rely on o3-mini-medium for debugging code or generating logical sequences in algorithms. Its quick response times ensure that development cycles are kept short while maintaining coding accuracy.
- Everyday Problem Solving: Casual users who need help with homework or daily puzzles can use the standard o3-mini mode, which provides concise and reliable outputs without the overhead of complex reasoning.

By using these modes as intended, users can avoid unnecessary computational expense and better tailor the model’s output to their needs.

Tips for Maximizing Free Requests

Given the free usage limits – 6 requests per hour in total – users should plan their queries effectively:

- Prioritize Complex Queries: Save your free requests for queries that need detailed explanations or insights, and consolidate simpler questions.
- Choose Modes Wisely: If a query does not need deep reasoning, use standard mode instead of medium or high. This saves your scarcer medium and high requests for the questions that actually benefit from them.
- Monitor Your Quota: Keep track of how many requests you have left. No sign-up is required and the quota resets every hour, so group related questions into a single query whenever you can.
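A small client-side tracker can help you monitor your quota. This Python sketch assumes each mode’s counter resets one hour after the current window began; the access page may use a different reset rule (for example, a rolling window), so treat `HourlyQuota` as a hypothetical helper rather than a description of the service.

```python
import time
from typing import Callable, Dict


class HourlyQuota:
    """Client-side tracker for per-mode hourly limits using a fixed one-hour window."""

    WINDOW_SECONDS = 3600

    def __init__(self, limits: Dict[str, int], clock: Callable[[], float] = time.monotonic):
        self.limits = dict(limits)
        self.clock = clock
        self.window_start = clock()
        self.used = {mode: 0 for mode in self.limits}

    def _maybe_reset(self) -> None:
        # Start a fresh window once an hour has passed since the current one began.
        now = self.clock()
        if now - self.window_start >= self.WINDOW_SECONDS:
            self.window_start = now
            self.used = {mode: 0 for mode in self.limits}

    def remaining(self, mode: str) -> int:
        self._maybe_reset()
        return self.limits[mode] - self.used[mode]

    def try_use(self, mode: str) -> bool:
        """Record one request if quota is left for this mode; return False otherwise."""
        if self.remaining(mode) <= 0:
            return False
        self.used[mode] += 1
        return True


if __name__ == "__main__":
    quota = HourlyQuota({"o3-mini": 3, "o3-mini-medium": 2, "o3-mini-high": 1})
    print(quota.try_use("o3-mini-high"), quota.try_use("o3-mini-high"))
```

Injecting the clock makes the reset logic easy to test without waiting an hour.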

The Impact of o3-mini on the AI Landscape

Offering robust reasoning capabilities for free, without login requirements, is a significant step toward democratizing artificial intelligence. By removing barriers to entry, it gives more users access to advanced AI tools that were once available only to select professionals or paying subscribers. This approach fosters innovation in several fields:

Educational Advancements

Students and educators now have ready access to powerful tools that can assist with homework, research, and complex problem solving. The transparency of chain-of-thought outputs means that students can learn by example. Instead of memorizing answers, they can study the reasoning process and develop critical thinking skills. This paradigm shift is likely to influence modern education, making AI an integral part of both learning and teaching methodologies.

Business and Enterprise Applications

For businesses, especially startups and small enterprises, cost efficiency is critical. Traditional AI models can be expensive and require significant resources to deploy at scale. Because o3-mini can be evaluated for free and is efficient to run through the API, companies can add advanced reasoning to their applications with modest overhead. This helps level the playing field, letting smaller companies compete with larger, established firms through innovative uses of cutting-edge technology.

Enhanced Developer Ecosystem

Developers benefit from faster iterations and stronger debugging support. Step-by-step reasoning improves the accuracy of code reviews and helps developers understand complex logic flows. Free access without sign-up encourages experimentation and rapid prototyping, and independent developers and open-source contributors can use the model to build new libraries, frameworks, or integrations that improve software quality and development speed.

Future Research and Development

The capability of o3-mini to provide detailed reasoning and structured outputs invites further research in natural language processing and AI ethics. Institutions and independent researchers alike can use the model to explore advanced topics such as model interpretability, safe AI deployment, and the socio-economic impacts of free access to powerful AI tools. With free access lowering the barrier to experimentation, innovation is expected to accelerate, leading to breakthroughs that can redefine AI’s role in society.

Comparative Advantages Over Other Models

While there are numerous AI models available today, o3-mini stands out due to its balance of speed, cost-effectiveness, and detailed reasoning ability. In comparison to traditional models that rely solely on providing direct answers, o3-mini’s chain-of-thought approach offers a more transparent and educational experience.

Cost and Efficiency

Many advanced AI models come with hefty subscription fees or high per-request costs. o3-mini, on the other hand, is designed as a low-cost alternative, especially within its free usage tier. This approach makes it feasible for anyone—from hobbyists to enterprise developers—to explore and harness AI’s potential without worrying about excessive costs. Although high reasoning modes (like o3-mini-high) are more resource-intensive and come with stricter limits, they are available for specialized tasks where the extra depth is required.
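For API use, outside the free tier, the extra cost of high-effort modes comes from reasoning tokens, which are billed as output tokens. This minimal Python sketch estimates per-request cost. The prices are assumptions based on o3-mini’s launch pricing (USD 1.10 per million input tokens and 4.40 per million output tokens) and should be checked against OpenAI’s current pricing page; the reasoning-token counts in the usage example are hypothetical.

```python
# Assumed o3-mini API prices in USD per 1M tokens; verify against current pricing.
INPUT_PRICE_PER_M = 1.10
OUTPUT_PRICE_PER_M = 4.40  # reasoning tokens are billed at this output rate


def request_cost(input_tokens: int, reasoning_tokens: int, visible_output_tokens: int) -> float:
    """Estimated USD cost of one request, counting reasoning tokens as output tokens."""
    if min(input_tokens, reasoning_tokens, visible_output_tokens) < 0:
        raise ValueError("token counts must be non-negative")
    output_tokens = reasoning_tokens + visible_output_tokens
    return (input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M) / 1_000_000


if __name__ == "__main__":
    # Same prompt and answer length; only the (hypothetical) reasoning effort differs.
    for reasoning in (2_000, 10_000):
        print(f"{reasoning} reasoning tokens: ${request_cost(1_000, reasoning, 500):.4f}")
```

With 1,000 input tokens and 500 visible output tokens, 2,000 reasoning tokens cost about $0.0121, while 10,000 reasoning tokens cost about $0.0473, so the same answer costs roughly four times as much when the model reasons longer.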

Performance in STEM Tasks

The ability to tackle advanced STEM challenges sets o3-mini apart. Compared with earlier models like o1-mini and other industry offerings, o3-mini shows a marked improvement in handling complex logical sequences and technical problems. This results in higher-quality outputs in fields ranging from advanced scientific research to competitive programming. Moreover, the step-by-step explanations in its responses let users verify and learn from its reasoning.

Scalability for Diverse Use Cases

Whether you are building AI-powered customer support, automated report generation systems, or educational tutoring platforms, o3-mini’s scalable design makes it a versatile choice. Its multiple reasoning modes let developers allocate resources according to the demands of each task, which helps control processing time while maintaining quality across a variety of applications.

Integrating o3-mini Into Your Workflow

For those who are considering incorporating o3-mini into their daily workflows, there are several practical approaches. The no sign-up free access model means that developers and non-developers alike have the opportunity to experiment without a formal registration process.

For Developers

- API Integrations: OpenAI documents how to use o3-mini through its API, so you can add its reasoning capabilities to existing systems, chatbots, or new applications. API access requires an OpenAI account and API key, separate from the free, no-sign-up chat page. In the API, the amount of reasoning is controlled with the `reasoning_effort` parameter (`low`, `medium`, or `high`).
- Rapid Prototyping: With free access and no registration hurdles, you can quickly test new ideas and build prototypes. Whether it’s creating a new tool for code analysis or developing an educational platform that leverages decision trees, o3-mini offers an agile development environment.
- Monitoring Performance: Measure the model’s output quality and response time to decide which reasoning mode to use. Experiment with each available mode to find the best balance for your specific task; this iterative process improves results and shows how the model can be tuned for future projects.
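Here is a minimal sketch of calling o3-mini through OpenAI’s Chat Completions endpoint with only the Python standard library, timing each call so you can compare reasoning efforts. It assumes an `OPENAI_API_KEY` environment variable with API access to o3-mini. The `reasoning_effort` values `low`, `medium`, and `high` are the API’s own; how they correspond to the free page’s standard, medium, and high modes is an assumption.

```python
import json
import os
import time
import urllib.request
from typing import Any, Dict, Tuple

API_URL = "https://api.openai.com/v1/chat/completions"
EFFORTS = ("low", "medium", "high")  # values accepted by reasoning_effort


def build_request(prompt: str, effort: str = "medium", api_key: str = "") -> urllib.request.Request:
    """Build (but do not send) a Chat Completions request for o3-mini."""
    if effort not in EFFORTS:
        raise ValueError(f"effort must be one of {EFFORTS}")
    body = {
        "model": "o3-mini",
        "reasoning_effort": effort,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


def timed_call(req: urllib.request.Request) -> Tuple[Dict[str, Any], float]:
    """Send the request and return (parsed JSON response, elapsed seconds)."""
    start = time.perf_counter()
    with urllib.request.urlopen(req, timeout=300) as resp:
        payload = json.load(resp)
    return payload, time.perf_counter() - start


if __name__ == "__main__":
    key = os.environ.get("OPENAI_API_KEY")
    if key:
        for effort in EFFORTS:
            data, seconds = timed_call(build_request("How many primes are below 100?", effort, key))
            details = data.get("usage", {}).get("completion_tokens_details", {})
            print(f"{effort}: {seconds:.1f}s, reasoning tokens: {details.get('reasoning_tokens')}")
    else:
        print("Set OPENAI_API_KEY to send real requests.")
```

The response’s `usage` object reports token counts; for reasoning models, `completion_tokens_details.reasoning_tokens` shows how many tokens went into reasoning, which helps explain latency differences between effort levels.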

For Educators and Researchers

- Classroom Demonstrations: Bring advanced AI into the classroom by demonstrating how o3-mini breaks down complex topics into understandable steps. The chain-of-thought process serves as a valuable educational aid, showing the logical progression of ideas.
- Research Projects: Utilize o3-mini for academic research that requires detailed analytical methodologies. Its ability to process and output extended reasoning sequences makes it ideal for experimental studies in computational linguistics, algorithm design, and cognitive science.
- Collaborative Learning: Encourage collaborative projects where students can compare their own problem solving with the model’s reasoning. This can lead to enhanced critical thinking skills and deeper understanding of complex subjects.

For Business Applications

- Customer Support Automation: Deploy o3-mini in customer support platforms to provide rapid, well-reasoned responses to technical queries. The model’s ability to offer step-by-step troubleshooting can enhance the customer experience and reduce resolution times.
- Content Generation and Analysis: Many businesses are turning to AI to generate content or analyze data. o3-mini can support editorial workflows by automating report generation, creating structured analyses, and drafting technical documents, and the free, no-sign-up access makes it easy to evaluate first.
- Process Optimization: Use the model to optimize internal workflows by automating repetitive tasks like data summarization or code reviews. This not only improves efficiency but also allows human workers to focus on more strategic, creative tasks.

Frequently Asked Questions About o3-mini

Q1: What is OpenAI o3-mini?

A1: OpenAI o3-mini is a compact reasoning model designed for strong performance on STEM tasks, coding challenges, and complex problem solving. It reasons step by step (chain of thought) before answering, which improves accuracy.

Q2: How can I access it for free?

A2: You can access o3-mini for free, without any sign-up requirements, by visiting app.giz.ai/assistant?mode=chat&baseModel=o3-mini. It offers six free requests per hour across its three reasoning modes.

Q3: What are the different usage modes available?

A3: The system is divided into three modes:

- Standard o3-mini: 3 requests per hour
- o3-mini-medium: 2 requests per hour
- o3-mini-high: 1 request per hour

These modes balance speed, cost, and reasoning effort based on task complexity.

Q4: Is there any need to sign up or log in?

A4: No, one of the major advantages of o3-mini is that it’s available free with no sign-up required. This removes barriers, allowing anyone to explore its capabilities immediately.

Q5: How does o3-mini compare to previous models like o1-mini?

A5: In OpenAI’s testing, o3-mini responded about 24% faster than o1-mini, and expert testers observed a 39% reduction in major errors on difficult real-world questions. Its selectable reasoning modes also let you trade speed for depth on complex tasks.

Q6: Can I use o3-mini for enterprise applications?

A6: Yes, while the free tier is ideal for testing and small-scale projects, enterprises can benefit from integrating o3-mini into their systems for tasks such as customer support, data analysis, and process optimization.

Q7: Are there any limitations to the free access?

A7: The free access tier allows a total of six requests per hour across three reasoning modes. For higher-volume or continuous usage, you may consider OpenAI’s subscription options or API plans.

Success Stories and User Experiences

Since its launch, many users across various sectors have shared success stories about how o3-mini has transformed their workflows. These testimonials underscore its benefits in terms of speed, accuracy, and ease of integration.

Developer Testimonials

A software engineer working on an internal automation project reported that switching to o3-mini drastically reduced the time taken for debugging and code review. By leveraging the detailed chain-of-thought responses, the engineer was able to pinpoint logical errors much faster than with traditional models. This efficiency not only improved the overall quality of code but also accelerated the development cycle.

Another developer highlighted the unique benefit of not needing to sign up. “Being able to use such a powerful reasoning model without any registration hassle is a game changer. It allows me to experiment freely and integrate ideas rapidly into prototypes,” said the developer, emphasizing the benefits of open access.

Educator and Researcher Narratives

In educational settings, o3-mini’s detailed problem breakdowns have proven invaluable. A mathematics professor shared that the model’s ability to provide transparent, step-by-step solutions helped students understand complicated calculus problems in a new light. By comparing their solutions with the model’s chain-of-thought reasoning, students could quickly grasp core concepts.

Researchers in computational linguistics have also found o3-mini to be a useful tool for studying reasoning processes in AI. The clear, multi-step outputs allow for in-depth analysis of how artificial intelligence interprets and solves multi-faceted problems, paving the way for future innovations in AI safety and interpretability.

Business Use Case Stories

A startup focused on automating customer support integrated o3-mini into their system for answering technical queries. The result was a noticeable improvement in customer satisfaction ratings due to faster and more detailed responses to common issues. The free access model allowed the company to test the system extensively before deciding whether to scale up its operations.

Another enterprise leveraged o3-mini to generate internal reports and data summaries. The model’s ability to break down complex datasets into clear, structured insights led to more informed decision-making processes and improved operational efficiency.

Addressing Common Concerns

While the benefits of using o3-mini are many, it is natural to have concerns about its free access model and usage limitations. Here, we address some common queries and explain how the system is designed to ensure reliability and feedback for continuous improvement.

Data Privacy and Safety

OpenAI trained o3-mini with safety in mind: deliberative alignment improves the model’s ability to filter out unsafe content and produce responsible, policy-compliant outputs, which makes it suitable for both educational and professional use. Free access does not require an account, but as with any online AI service, check the provider’s privacy policy before sharing sensitive information.

Managing Free Request Limits

Some users worry about hitting the free request limit and being unable to complete their tasks. The design of o3-mini’s free access model is such that it resets every hour, ensuring that even high-demand users have periodic access. Additionally, by choosing the appropriate mode for each query—standard for everyday questions and medium or high for more complex inquiries—users can manage the quota effectively without experiencing significant interruptions.

Accuracy Versus Cost Trade-offs

There can be concerns regarding the trade-off between higher reasoning efforts and cost efficiency. While it is true that o3-mini-high provides more in-depth analysis, it is limited to one free request per hour to balance resource consumption. Users are encouraged to use the appropriate reasoning level for their needs. If a particular query can be addressed sufficiently in standard or medium mode, there is no need to use high mode unnecessarily.

The Future of AI with o3-mini

The release of OpenAI o3-mini represents a turning point in how advanced AI is deployed and accessed by the public. With its free, no sign-up offering, it signals a shift toward more inclusive AI experiences that benefit educators, developers, businesses, and researchers alike.

Democratizing Advanced AI

Historically, advanced reasoning models have been restricted behind paywalls or limited to enterprise customers. o3-mini’s availability for free heralds a new era of AI democratization where powerful tools are accessible to everyone. This not only spurs innovation across various fields but also encourages a wider pool of users to experiment with AI, leading to unforeseen creative applications and breakthroughs.

Driving Future Research and Innovation

The transparency provided by o3-mini’s chain-of-thought outputs can propel new research in AI interpretability and safety. By understanding how and why the model arrives at its answers, researchers can further refine AI paradigms, identify potential ethical concerns, and enhance safety protocols. These developments have the potential to pave the way for even more advanced AI, with improved transparency and user trust.

Expanding Integration Possibilities

The ease of access — no sign-up, immediate free use — opens new avenues for integrating AI into everyday applications. From smart educational tools and dynamic customer support systems to comprehensive analytics and reporting services, o3-mini’s versatility is set to spur innovative integrations. These integrations will not only boost productivity but also facilitate a better understanding of how AI reasoning can be tailored to meet diverse needs across industries.

How to Maximize the Benefits of Free o3-mini Access

For users eager to make the most of this technology, here are some actionable tips to enhance your experience with free o3-mini access:

- Plan Your Queries: Group similar questions into a single query where possible to minimize usage while maximizing output.
- Leverage Different Modes: Understand when to use each reasoning mode. For general queries, the standard mode suffices. For detailed problem breakdowns, switch to medium or high mode accordingly.
- Experiment with Applications: Test the model in various settings such as code debugging, mathematical problem solving, and content creation to discover new ways of leveraging o3-mini.
- Monitor OpenAI’s Announcements: Stay updated with the latest improvements and recommended use cases through OpenAI’s official blogs and documentation.
- Utilize the No Sign-Up Feature: Since no registration is required, share the access link with colleagues and collaborators to foster community-driven learning experiences.
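Grouping related questions can be as simple as building one numbered prompt. This Python helper is hypothetical and only formats text; it does not call any service:

```python
from typing import List


def batch_questions(questions: List[str], context: str = "") -> str:
    """Combine related questions into one numbered prompt so they use a single request."""
    cleaned = [q.strip() for q in questions if q.strip()]  # drop blank entries
    if not cleaned:
        raise ValueError("need at least one non-empty question")
    lines = [context.strip()] if context.strip() else []
    lines.append("Answer each question below separately, numbering your answers to match.")
    lines.extend(f"{i}. {q}" for i, q in enumerate(cleaned, start=1))
    return "\n".join(lines)


if __name__ == "__main__":
    print(batch_questions(
        ["What is a derivative?", "What is an integral?", "How are they related?"],
        context="I am reviewing introductory calculus.",
    ))
```

Asking for numbered answers makes it easy to match each answer to its question when one response covers several of them.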

Following these strategies not only ensures that you optimize your free usage quota but also opens new possibilities for professional and personal growth through advanced AI technology.

Detailed Walkthrough: Using o3-mini in Real-World Scenarios

To better illustrate the benefits of o3-mini, let’s consider some real-world scenarios where this model makes a difference:

Scenario 1: Academic Problem Solving

Imagine a college student working on differential equations for an advanced mathematics class. By entering the problem into o3-mini, the student receives a detailed chain-of-thought explanation. The response includes the identification of key equations, step-by-step derivations, and the final solution, all explained in a digestible format. Such transparency not only helps the student verify the logic but also serves as a reference for future studies.
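For instance, a first-order linear equation might be broken down like this. The problem and steps are a hand-worked illustration of the step-by-step format, not actual o3-mini output:

```latex
\begin{aligned}
& y' + 2y = 4, \quad y(0) = 0 && \text{problem} \\
& \mu(x) = e^{\int 2\,dx} = e^{2x} && \text{integrating factor} \\
& \bigl(y\,e^{2x}\bigr)' = 4e^{2x} && \text{multiply both sides by } \mu(x) \\
& y\,e^{2x} = 2e^{2x} + C && \text{integrate} \\
& y = 2 + C e^{-2x} && \text{divide by } e^{2x} \\
& y(0) = 2 + C = 0 \;\Rightarrow\; C = -2 && \text{apply initial condition} \\
& y = 2 - 2e^{-2x} && \text{solution} \\
& y' + 2y = 4e^{-2x} + 4 - 4e^{-2x} = 4 && \text{check}
\end{aligned}
```

Each line names the operation it performs, so a student can check any single step without redoing the whole derivation.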

Scenario 2: Code Debugging and Enhancement

A software developer is facing an unexpected error in a large codebase. Using o3-mini-medium, the developer submits the problematic code and a description of the issue. The model processes the query, outlines the logic behind the error, and suggests targeted modifications. The detailed reasoning steps help the developer understand the root cause and implement more robust error handling in the longer term, leading to both immediate fixes and improved coding practices overall.
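To illustrate the kind of root-cause fix and error handling described here, consider a hypothetical off-by-one bug in a moving-average function. The Python example is invented for illustration; the buggy version is kept alongside the fix so the difference is visible:

```python
from typing import List, Sequence


def moving_average_buggy(values: Sequence[float], window: int) -> List[float]:
    # Off-by-one: the range stops one position early, so the last window is dropped.
    return [sum(values[i:i + window]) / window for i in range(len(values) - window)]


def moving_average(values: Sequence[float], window: int) -> List[float]:
    """Average of each consecutive `window`-sized slice of `values`."""
    # More robust error handling: reject windows that cannot produce a valid result.
    if window <= 0:
        raise ValueError("window must be positive")
    if window > len(values):
        raise ValueError("window is larger than the input")
    # Root-cause fix: a sequence of length n has n - window + 1 complete windows.
    return [sum(values[i:i + window]) / window for i in range(len(values) - window + 1)]


if __name__ == "__main__":
    data = [1, 2, 3, 4]
    print(moving_average_buggy(data, 2), moving_average(data, 2))
```

The buggy version fails silently (it returns a shorter list rather than raising), which is why explaining the root cause, not just patching the symptom, matters.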

Scenario 3: Content Generation for Business Reports

A business analyst needs to draft a technical report that summarizes complex datasets and provides high-level insights. Using o3-mini-high, the analyst inputs the raw data and receives a well-structured analysis with bullet-point summaries, trend explanations, and predictive insights. Because the model reasons through the data step by step, the resulting report is detailed enough to inform strategic decisions. Such use cases highlight how o3-mini can be integrated into corporate workflows to boost productivity.

Scenario 4: Collaborative Innovation in Research Labs

A research team working on machine learning algorithms uses o3-mini as a tool for peer review. Each team member submits their individual problem-solving approaches through the model, which in turn generates transparent reasoning steps that can be shared during group discussions. This process fosters a collaborative environment where ideas are freely exchanged, and innovative solutions are co-developed based on the model’s comprehensive feedback.

The SEO and Content Marketing Impact of Free o3-mini

Free, no-sign-up access to a powerful model like o3-mini creates significant opportunities for content marketers and SEO specialists. By sharing success stories, comparative analyses, and detailed user guides like this article, you not only boost awareness but also drive organic traffic to platforms offering free access. Key SEO strategies include:

- Keyword Integration: Using primary keywords such as o3-mini, free, and no sign-up throughout your content (in titles, headings, and body text) can improve search engine rankings.
- High-Quality, In-Depth Content: Comprehensive articles of more than 3,000 words can rank well in search engines, and detailed guides and case studies add authority and relevance.
- Engaging Multimedia: Incorporate images with descriptive alt texts that include keywords, such as “o3-mini free access interface” or “chain-of-thought reasoning with o3-mini”. Visuals make content more engaging and improve SEO.
- Authority Building: Citing official OpenAI announcements and reputable sources (using inline source links) reinforces credibility. Over time, consistent high-quality content helps build domain authority.
- User-Centric Content: Providing actionable tips, FAQs, and detailed use cases ensures that users stay engaged. This increases dwell time and reduces bounce rates, both of which are positive signals for SEO.

Future Enhancements and Continuous Development

OpenAI is no stranger to innovation, and o3-mini is poised to evolve even further. Future enhancements may include better integration of external data sources, improved safety and accuracy measures, and more flexible reasoning options. As user feedback is aggregated and analyzed, developers will likely refine the model further to better suit diverse needs.

Anticipated Improvements

- Expanded Context Window: Future updates may push the boundaries of token limits even further, enabling even more complex and extended responses.
- Enhanced Safety Protocols: As the model is widely adopted, further refinements in deliberative alignment will help maintain robust safety standards while preserving the depth of reasoning.
- Customizable Reasoning Efforts: Future versions might offer even more granular control over the reasoning process, allowing users to adjust not only the reasoning mode but also parameters like verbosity and detail level.

Embracing Community Feedback

An essential part of the development process for o3-mini is community feedback. Early adopters have reported positive experiences, and suggestions for incremental improvements are already being considered. OpenAI’s commitment to transparency means that the evolution of o3-mini will remain closely tied to the user experience, and updates will continue to be announced through official channels so that users stay informed about the latest developments.

Conclusion

OpenAI o3-mini is transforming the artificial intelligence landscape by providing powerful reasoning capabilities completely free and without the need for sign-up. With its three distinct reasoning modes—offering 3, 2, and 1 requests per hour respectively—the model is accessible to anyone who wants to harness advanced AI technology for problem solving, coding, research, or content creation. Its superior performance in STEM applications, coupled with faster response times and detailed chain-of-thought outputs, makes it an indispensable tool in today’s fast-paced digital environment.

Whether you are a student seeking help with complex academic tasks, a developer debugging intricate code, or a business analyst striving to generate insightful reports, you can now explore the depths of advanced AI reasoning with no barriers to entry. The free access model, with six requests per hour available across multiple modes, ensures that everyone gets a taste of cutting-edge technology without any cost or registration hassle.

Experience the future of AI today by visiting app.giz.ai/assistant?mode=chat&baseModel=o3-mini and start your journey with OpenAI o3-mini. Embrace this opportunity to see firsthand how advanced reasoning, rapid response, and unmatched cost efficiency can revolutionize your work and learning experiences.

With OpenAI leading the way in safe, transparent, and highly efficient AI models, the potential applications are only limited by your imagination. Get started now and join the growing community of users who are defining the future of problem solving—free, straightforward, and without the need for sign-up.