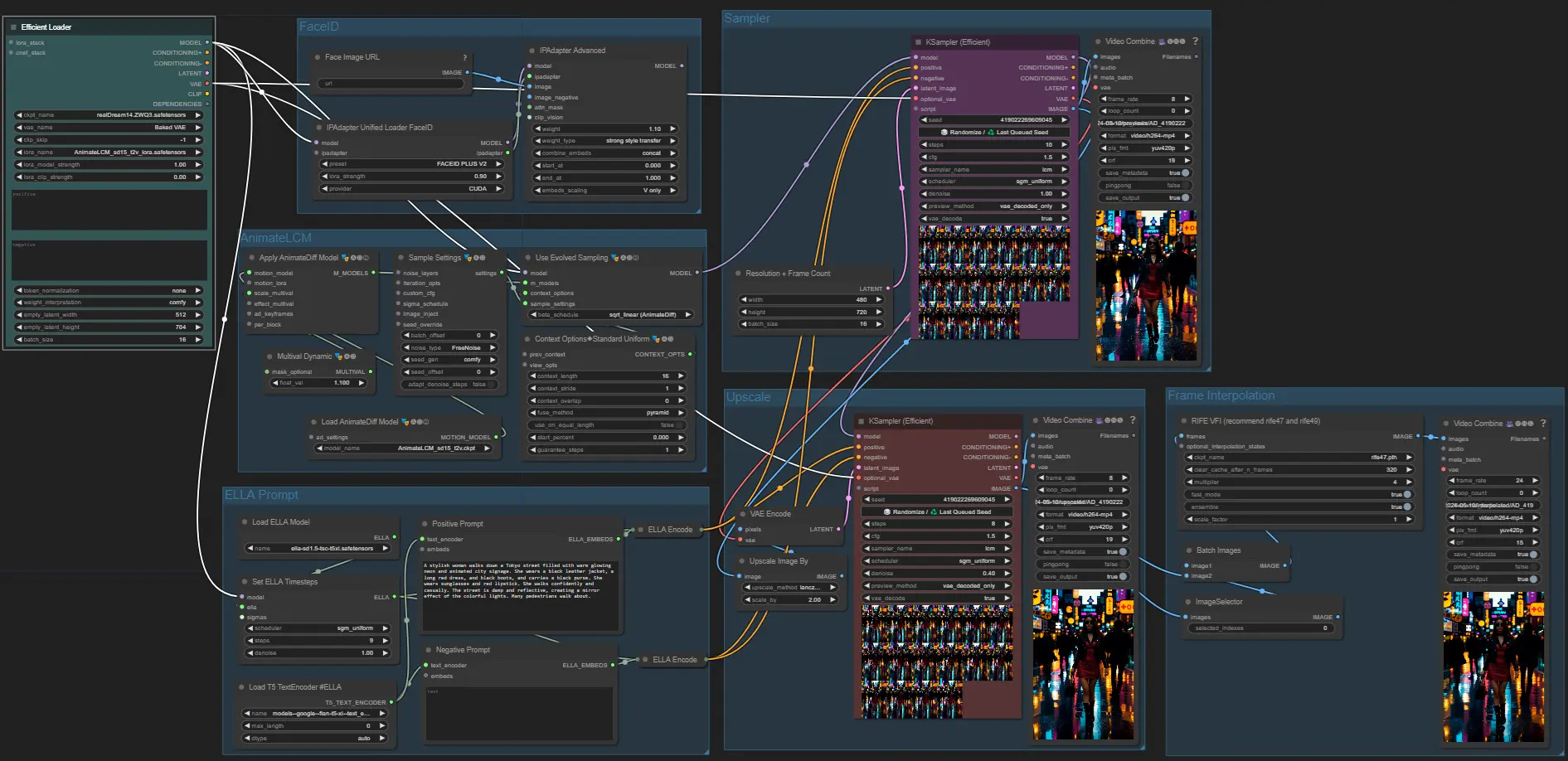

The ComfyUI workflow for GizAI’s AI Face Video Generator has been released on OpenArt Workflows and GitHub. This workflow combines the AnimateLCM model with FaceID to create dynamic, realistic face animations directly from text prompts and images.

Key Features

- AnimateDiff and AnimateLCM enable fast video creation even on low VRAM (tested on an RTX 4070 Ti Super with 16 GB of VRAM).
- ELLA is integrated to improve prompt adherence and animation consistency.
- Video upscaling and frame interpolation are supported.
- IPAdapter FaceID Plus v2 can be added to apply a face from a reference image.

Workflow Screenshot

Sample Video

The prompt used is sourced from OpenAI’s Sora:

“A stylish woman walks down a Tokyo street filled with warm glowing neon and animated city signage. She wears a black leather jacket, a long red dress, and black boots, and carries a black purse. She wears sunglasses and red lipstick. She walks confidently and casually. The street is damp and reflective, creating a mirror effect of the colorful lights. Many pedestrians walk about.”

Please note that the FaceID feature was not used in this particular example.
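For readers who would rather queue the workflow from a script than from the ComfyUI interface, here is a minimal sketch. It assumes the workflow has been exported in ComfyUI's API format (a JSON object mapping node IDs to `class_type` and `inputs`) and that a ComfyUI server is running locally on the default port 8188. The node ID `"6"`, the class name `ExampleTextNode`, and the input name `text` are hypothetical placeholders; the actual IDs and names in this workflow have to be looked up in the exported file.

```python
import json
import urllib.request
import uuid

COMFYUI_URL = "http://127.0.0.1:8188"  # assumption: default local ComfyUI address


def set_input(workflow, node_id, input_name, value):
    """Return a copy of an API-format workflow with one node input replaced."""
    updated = json.loads(json.dumps(workflow))  # deep copy via JSON round-trip
    updated[node_id]["inputs"][input_name] = value
    return updated


def build_payload(workflow, client_id=None):
    """Wrap a workflow in the JSON body sent to ComfyUI's /prompt endpoint."""
    return {"prompt": workflow, "client_id": client_id or uuid.uuid4().hex}


def queue_workflow(workflow, base_url=COMFYUI_URL):
    """POST the workflow to a running ComfyUI server and return its JSON reply."""
    data = json.dumps(build_payload(workflow)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/prompt",
        data=data,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())


# Tiny stand-in for an exported workflow; the real file has many more nodes.
# Node ID "6" and class "ExampleTextNode" are hypothetical.
example = {"6": {"class_type": "ExampleTextNode", "inputs": {"text": ""}}}
patched = set_input(example, "6", "text", "A stylish woman walks down a Tokyo street")
payload = build_payload(patched, client_id="demo")

# Uncomment with a ComfyUI server running to actually queue the job:
# print(queue_workflow(patched))
```

Building the payload separately from sending it makes it possible to inspect the patched workflow before anything is submitted to the server.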

If you have any questions or run into problems, please file an issue in the following repository: https://github.com/GizAI/ComfyUI-workflows